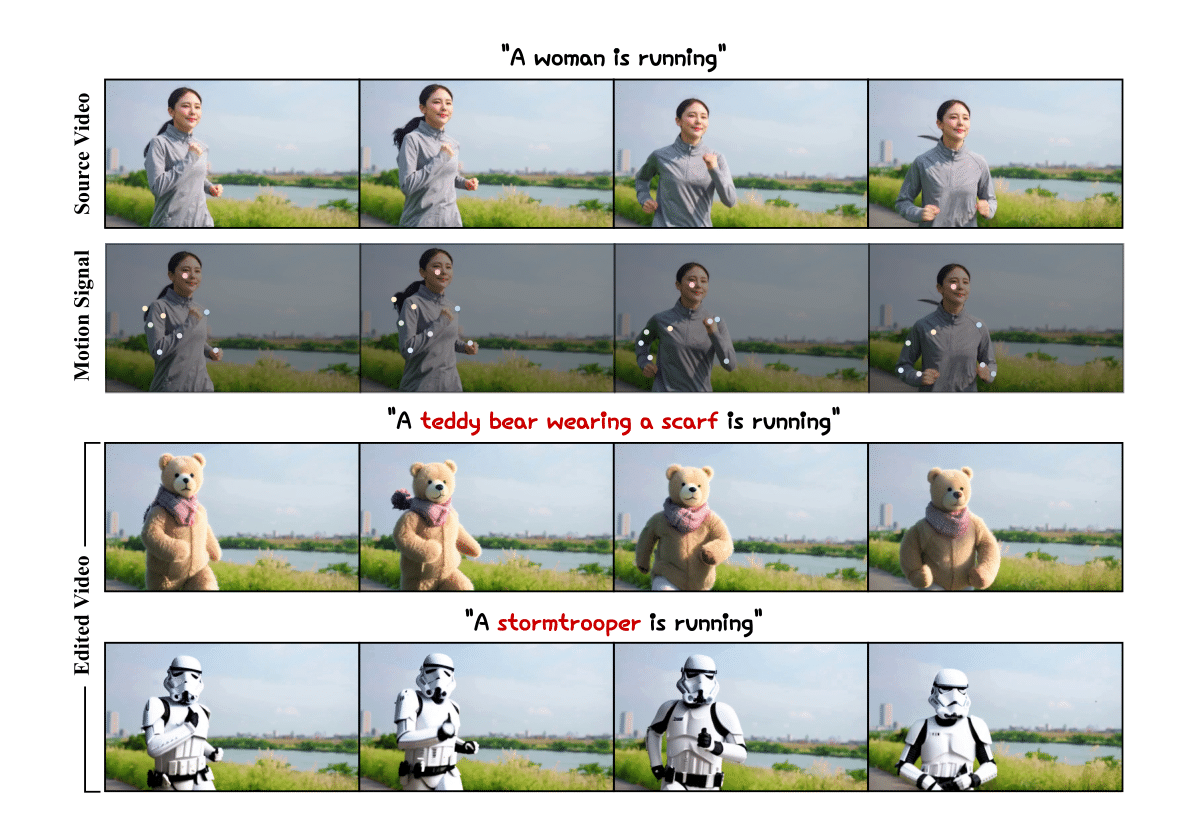

Point-to-Point: Sparse Motion Guidance for Controllable Video Editing

We enhanced point-based video editing by introducing a new motion representation that leverages the priors of a video diffusion model.

I am a Ph.D. student at Seoul National University, under the supervision of Prof. Nojun Kwak.

My primary focus is on video and image generation, aiming to push the boundaries of their applications in real-world scenarios. Specifically, my central goal is to develop generative models that provide more diverse experiences to users. My research interests also extend to the broader computer vision field, with experience spanning diffusion, video rendering, segmentation, and 3D object detection.

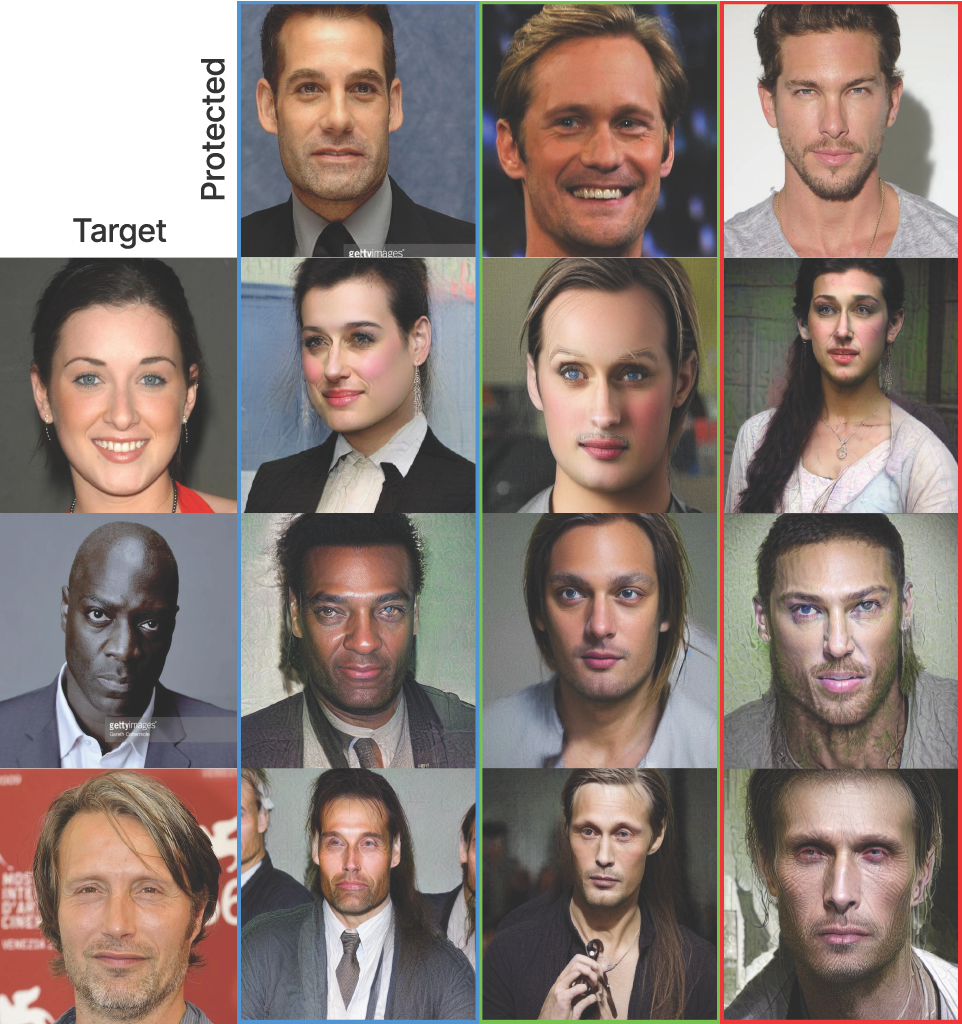

We prevented unauthorized T2I customization by guiding the generated image to show the intended target.

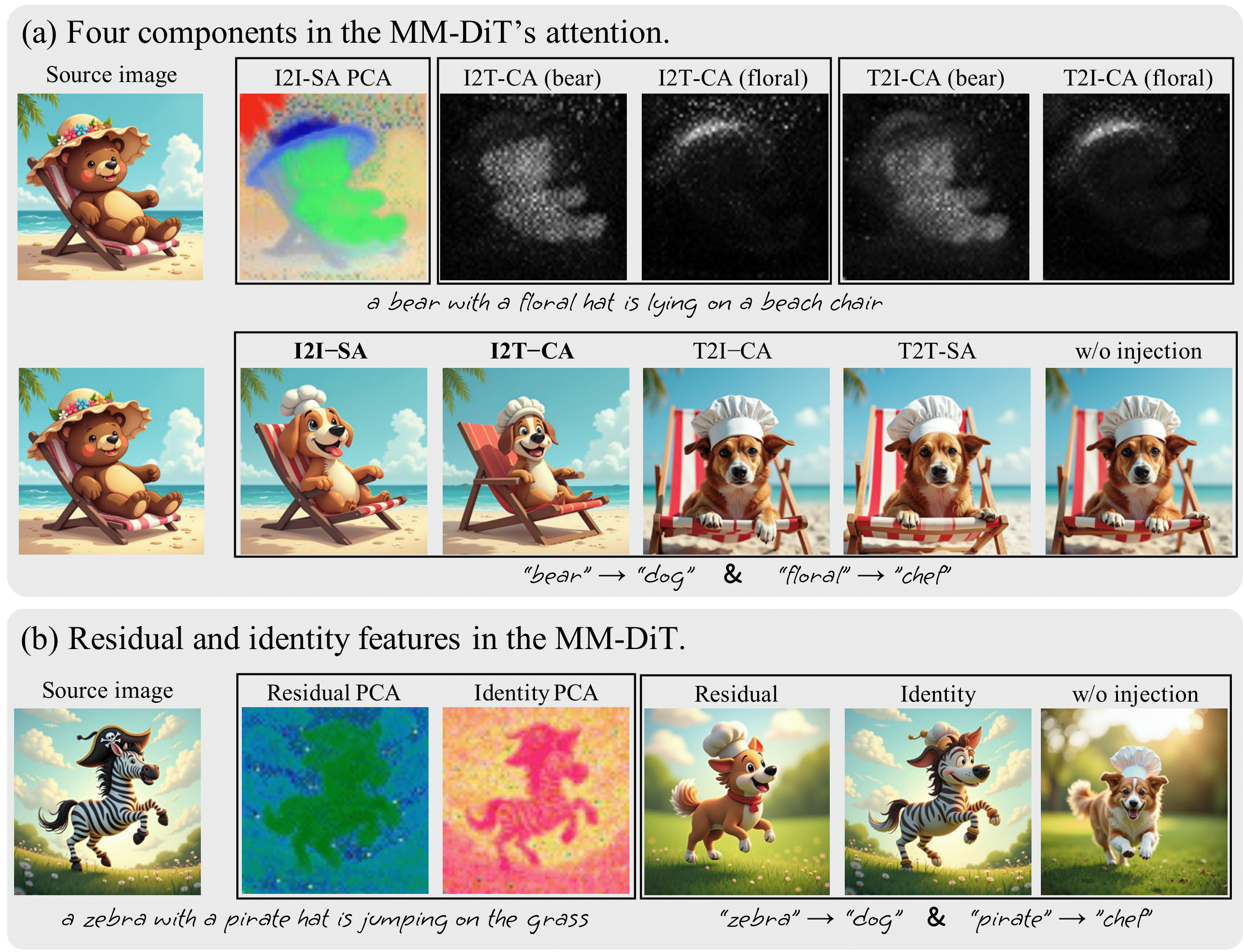

We developed a new real-image editing approach for FLUX, leveraging analysis of intermediate representations. ReFlex achieved text alignment improvements of 1.69-7.11% on PIE-Bench and 3.21-16.46% on Wild-TI2I-Real.

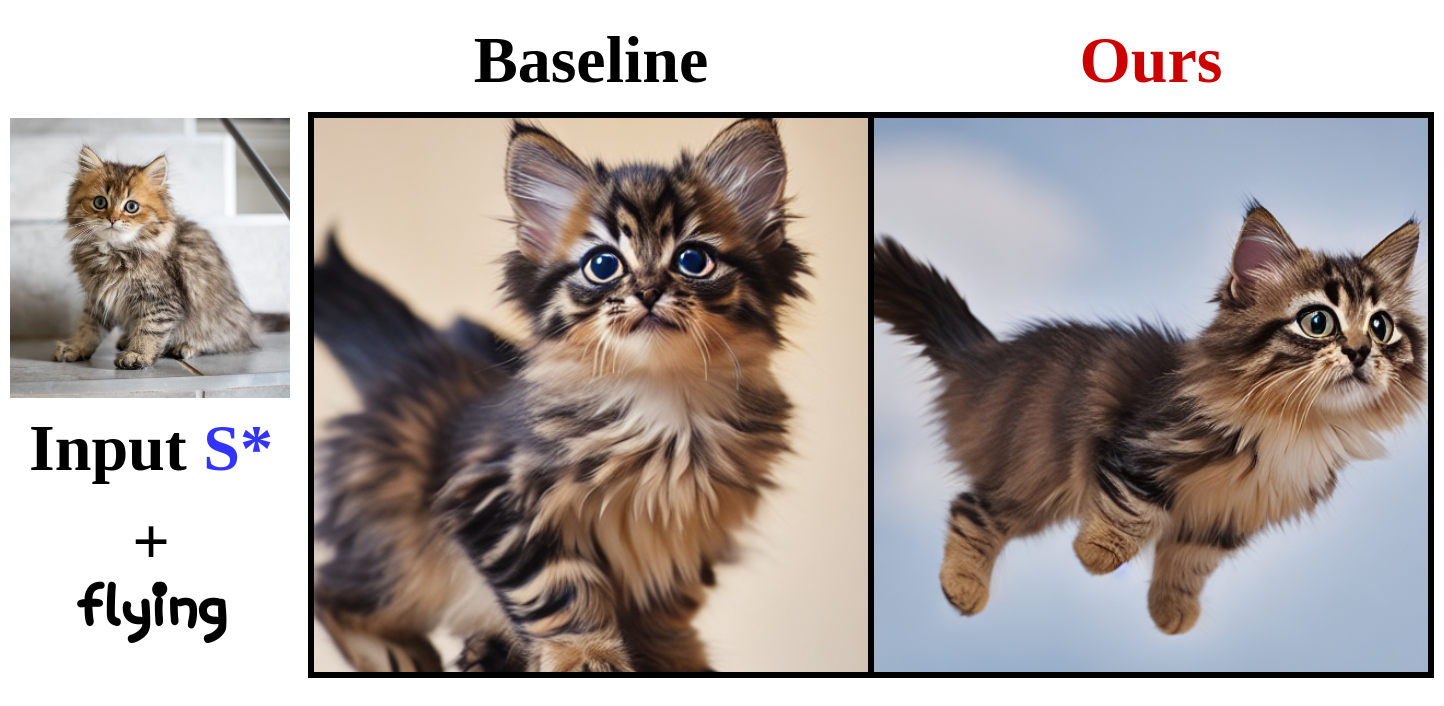

We discovered a conflict among contextual embeddings in zero-shot T2I customization. By resolving the conflict, we improved text alignment by 3.82-5.26% and image alignment by 2.11-12.2%.

We expanded video editing capabilities to enable subject replacement across diverse body structures. We introduced a new motion token, resulting in text alignment improvements of up to 7.66%.

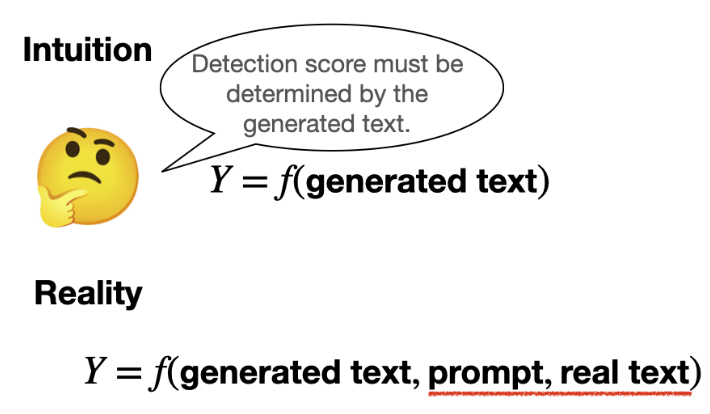

We showed the existence of a backdoor path that confounds the relationship between text and machine-text detection scores.

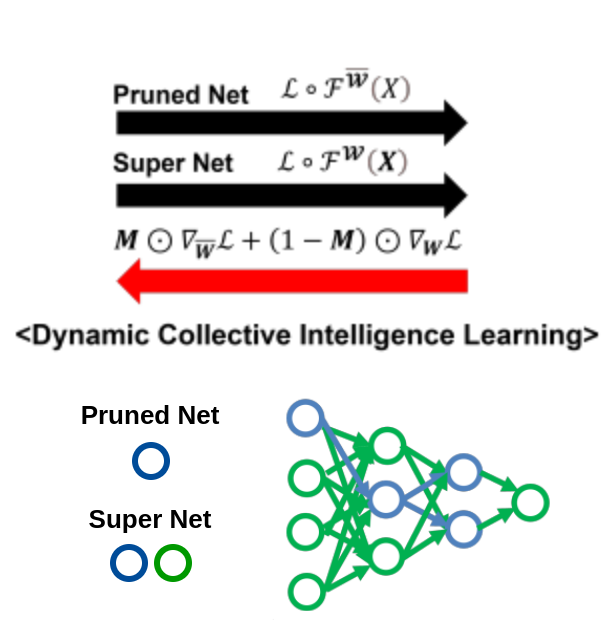

We employed refined gradients to update the pruning weights, enhancing both training stability and model performance.

In video scene rendering, we reformulate neural radiance fields to additionally consider consistency fields, enabling more efficient and controllable scene manipulation.

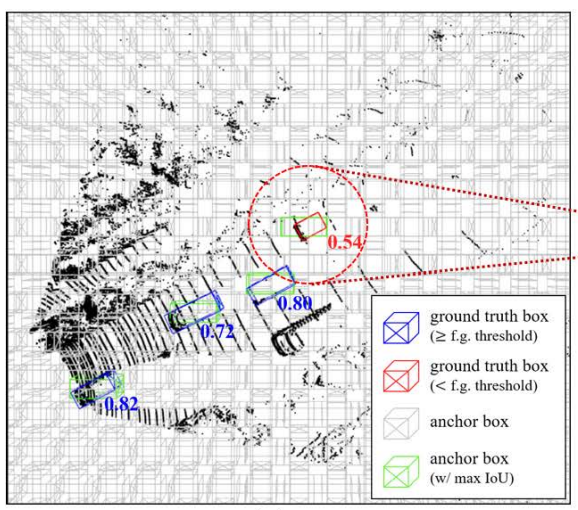

We utilize the Gaussian Mixture Model (GMM) in the 3D object detection task to predict the distribution of 3D bounding boxes, eliminating the need for laborious, hand-crafted anchor design.

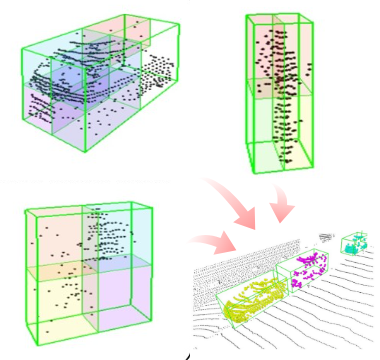

We delve into data augmentation in 3D object detection, leveraging sophisticated and rich structural information present in 3D labels.