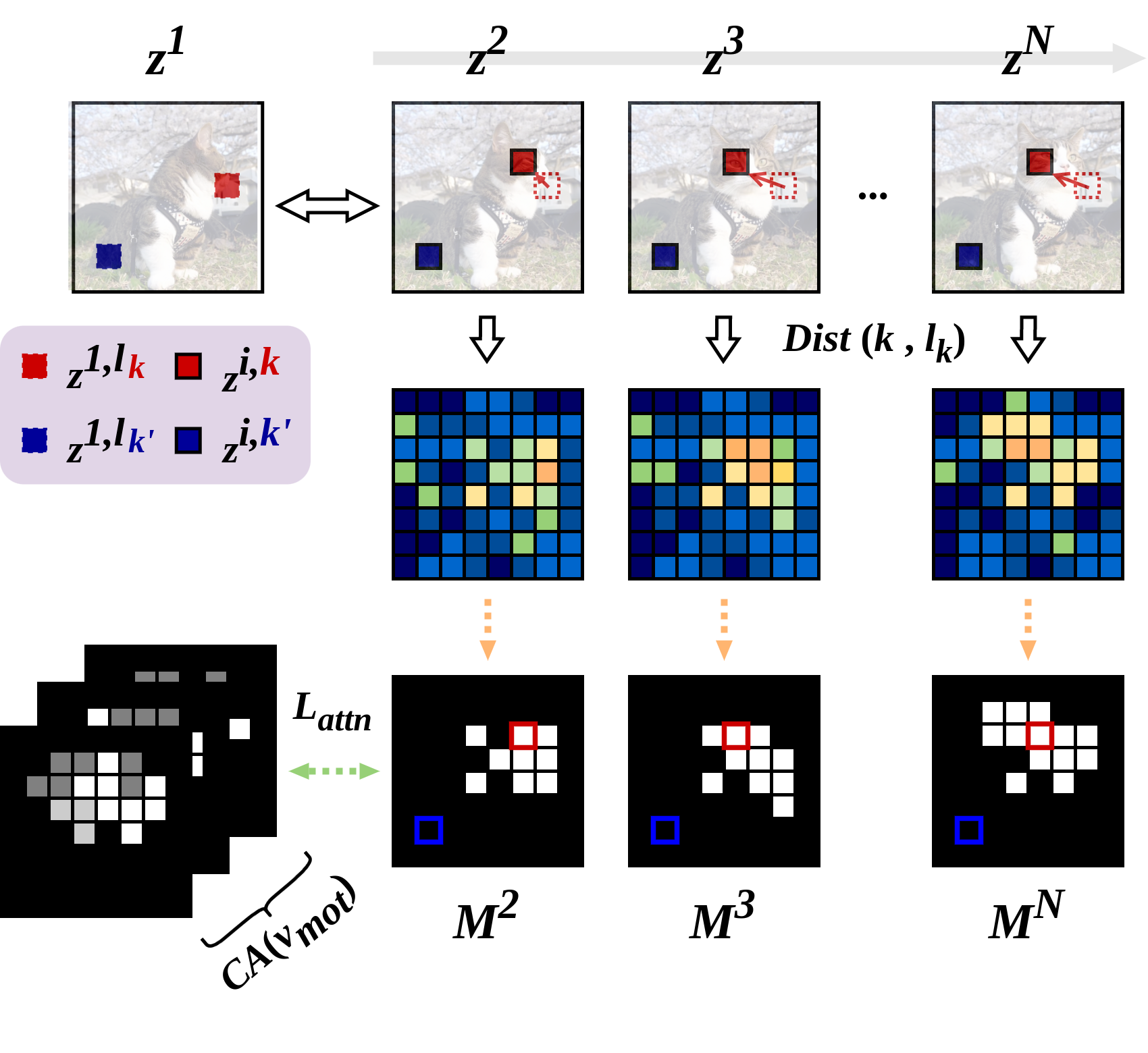

Inflated Text Embedding1

We expand the textual embedding space of a motion word to represent a time flow in videos rather than a frozen moment in images: we add a temporal axis to an embedding space of our new motion word Smot and let Smot inject its information into a proper region in each frame.

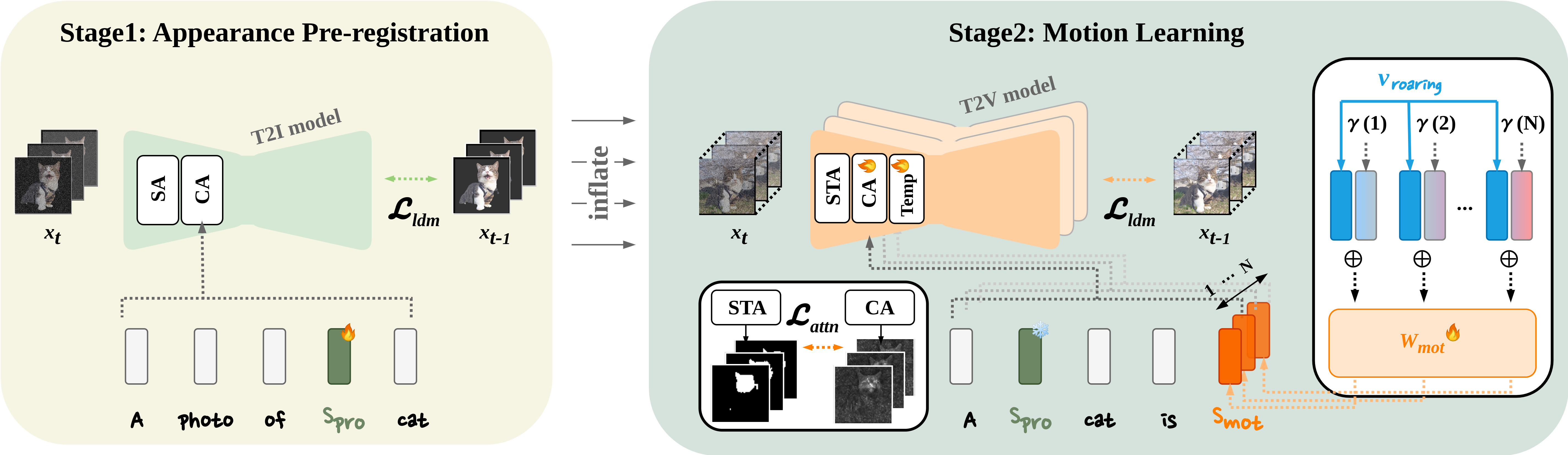

Pre-registration Strategy3

The motion and the protagonist get easily entangled. To resolve this problem, we propose a two-stage training strategy to untangle the two properties. We newly define a pseudo-word Spro that represents the appearance and texture features of the protagonist. As the protagonist and its appearances are already registered in the text encoder, Smot can be effectively learned using disentangled motion information for the video.