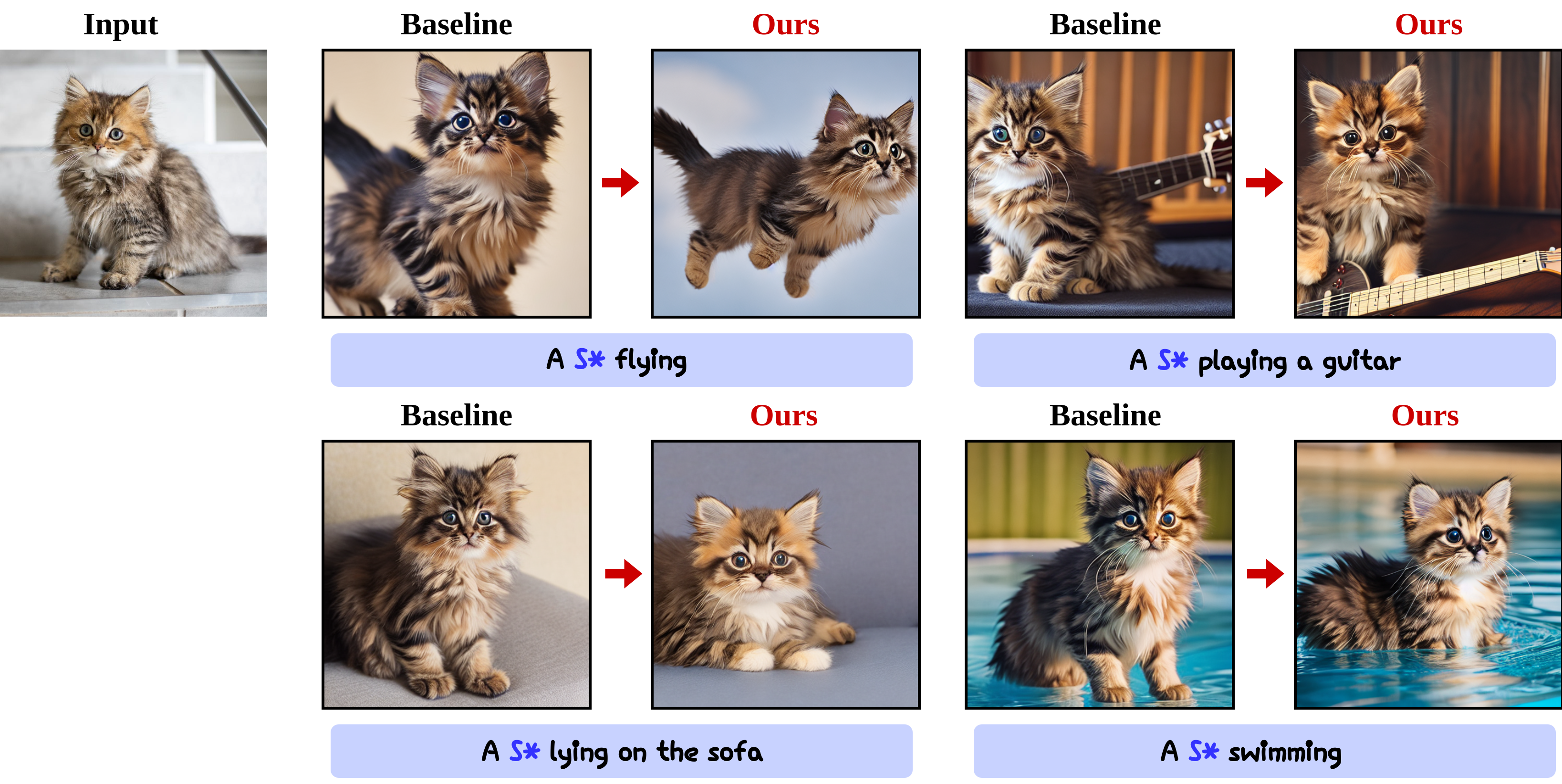

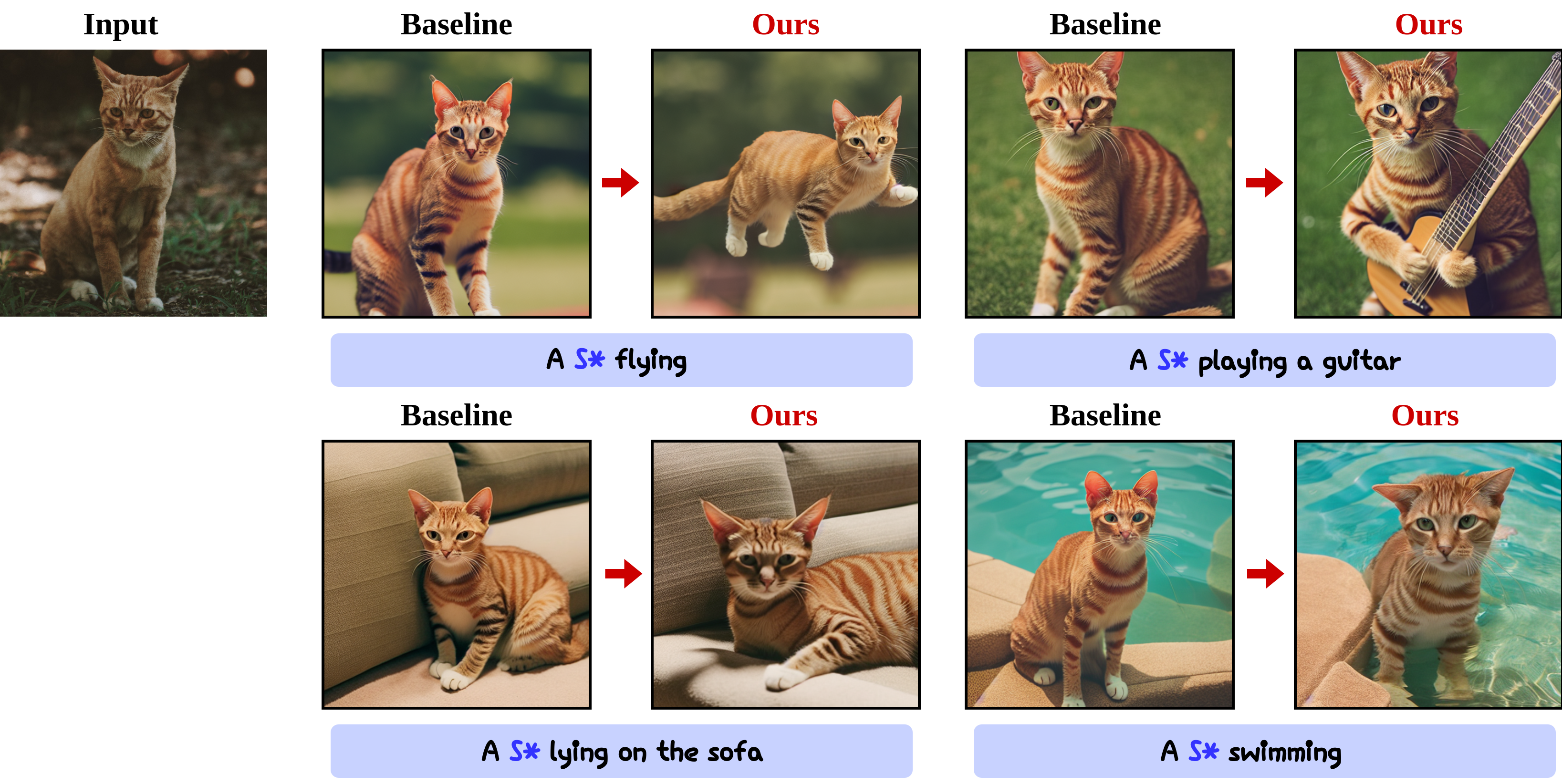

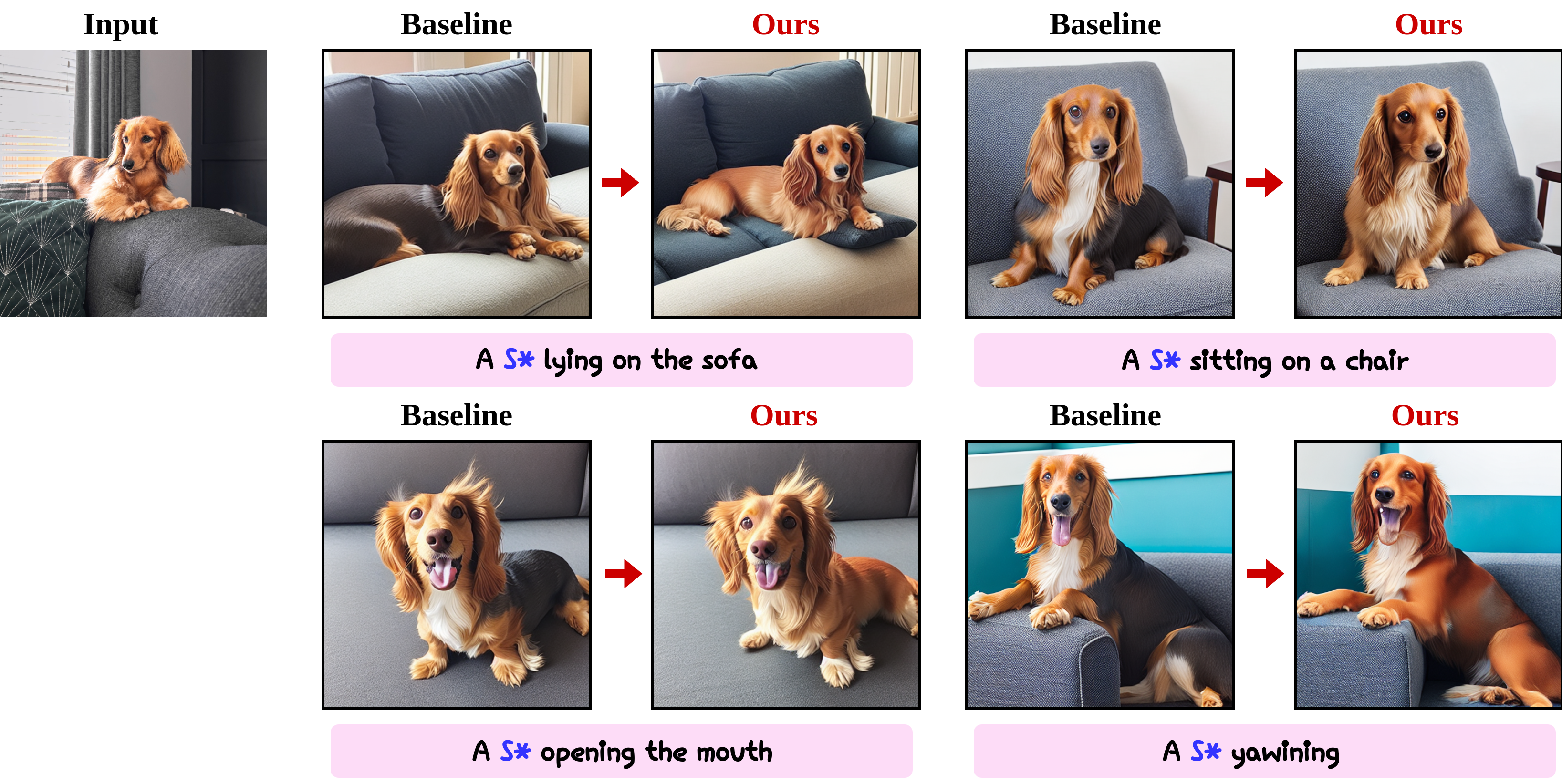

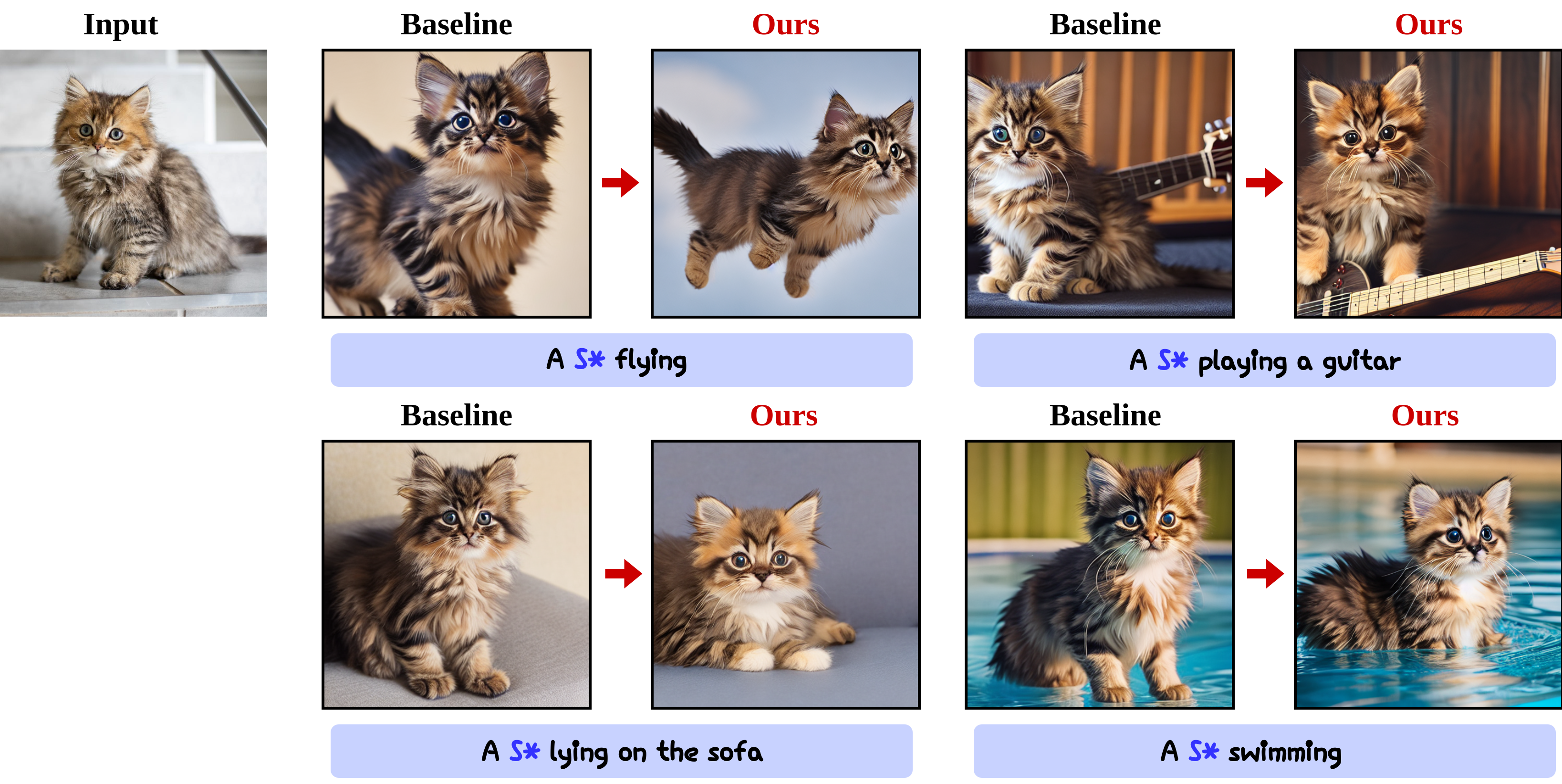

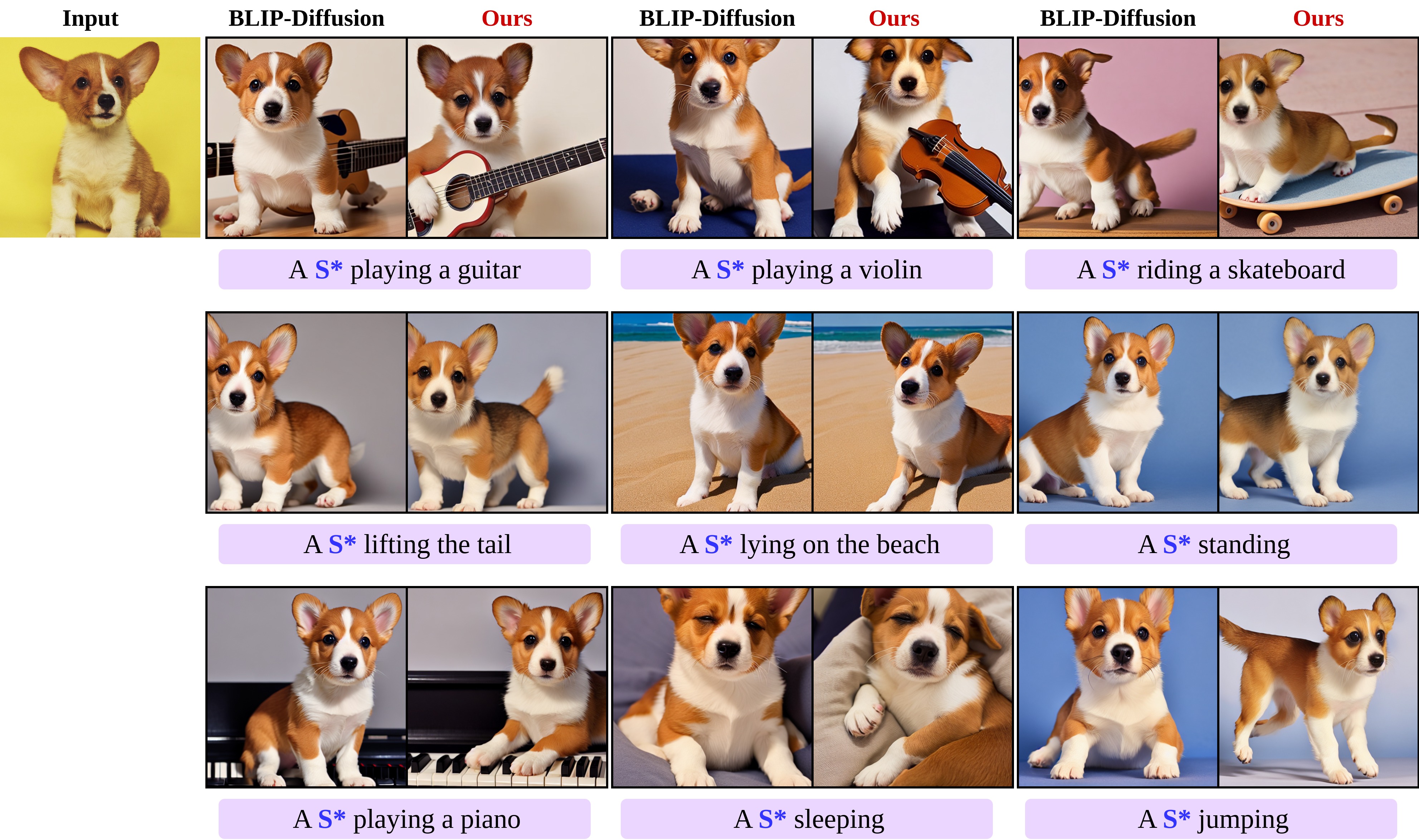

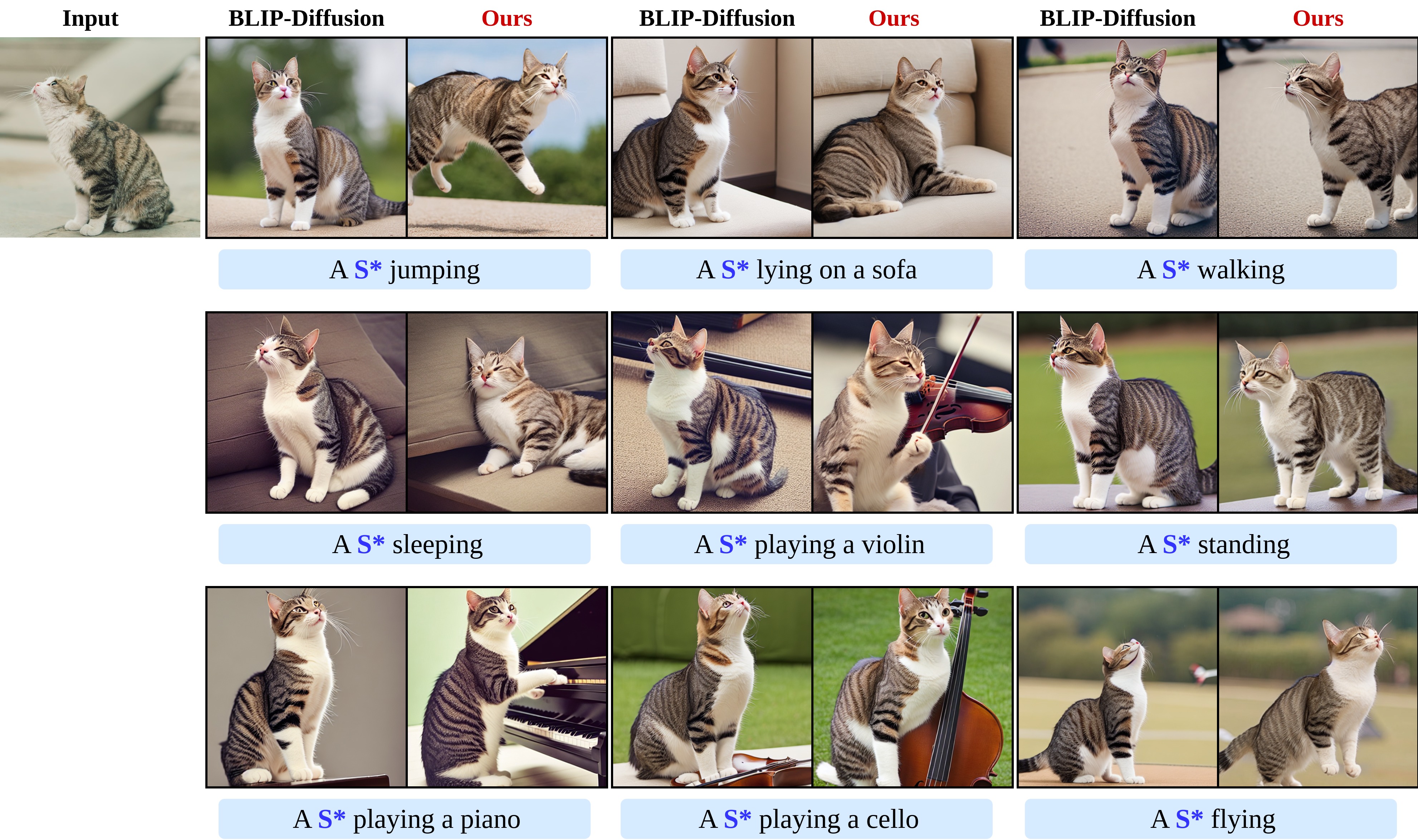

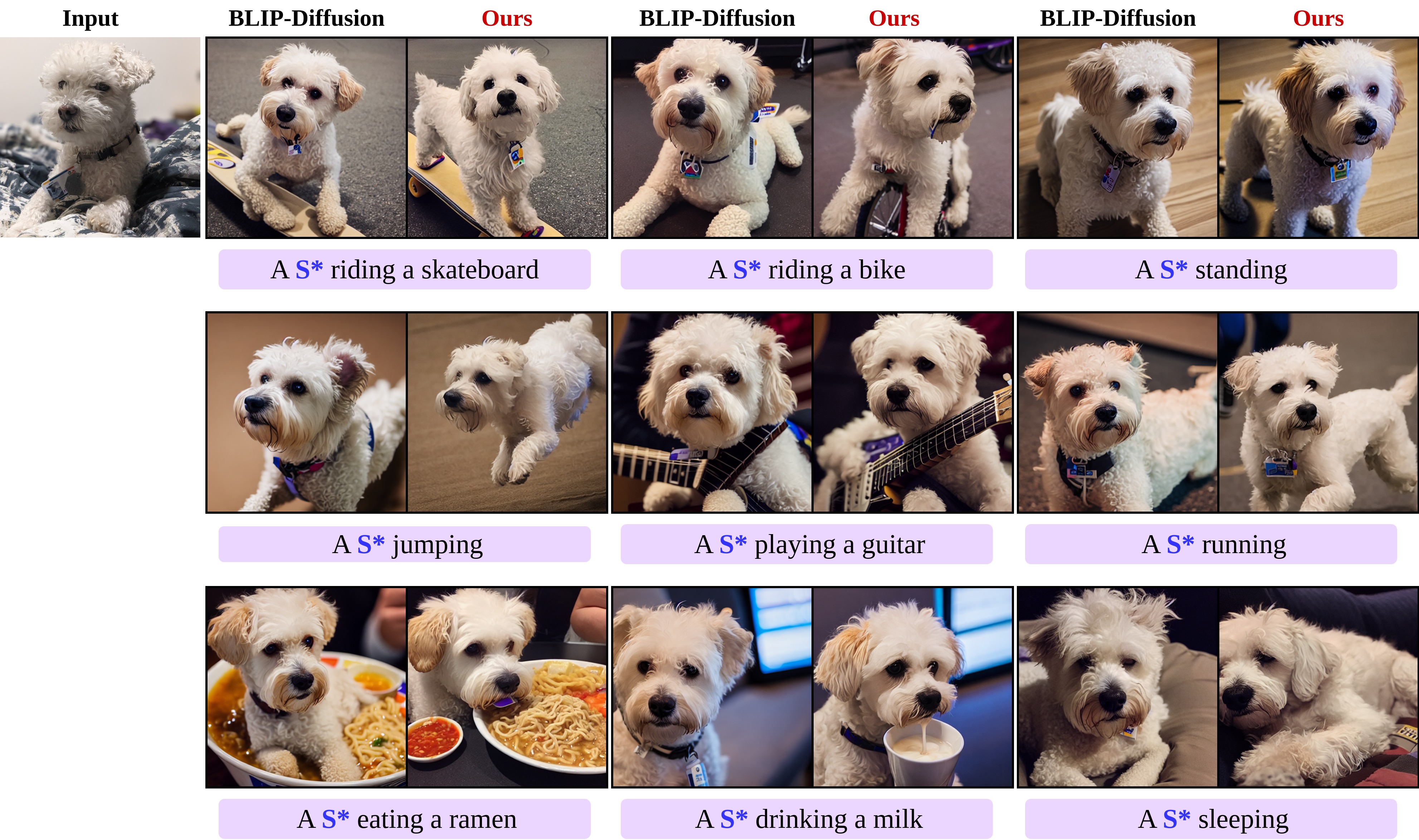

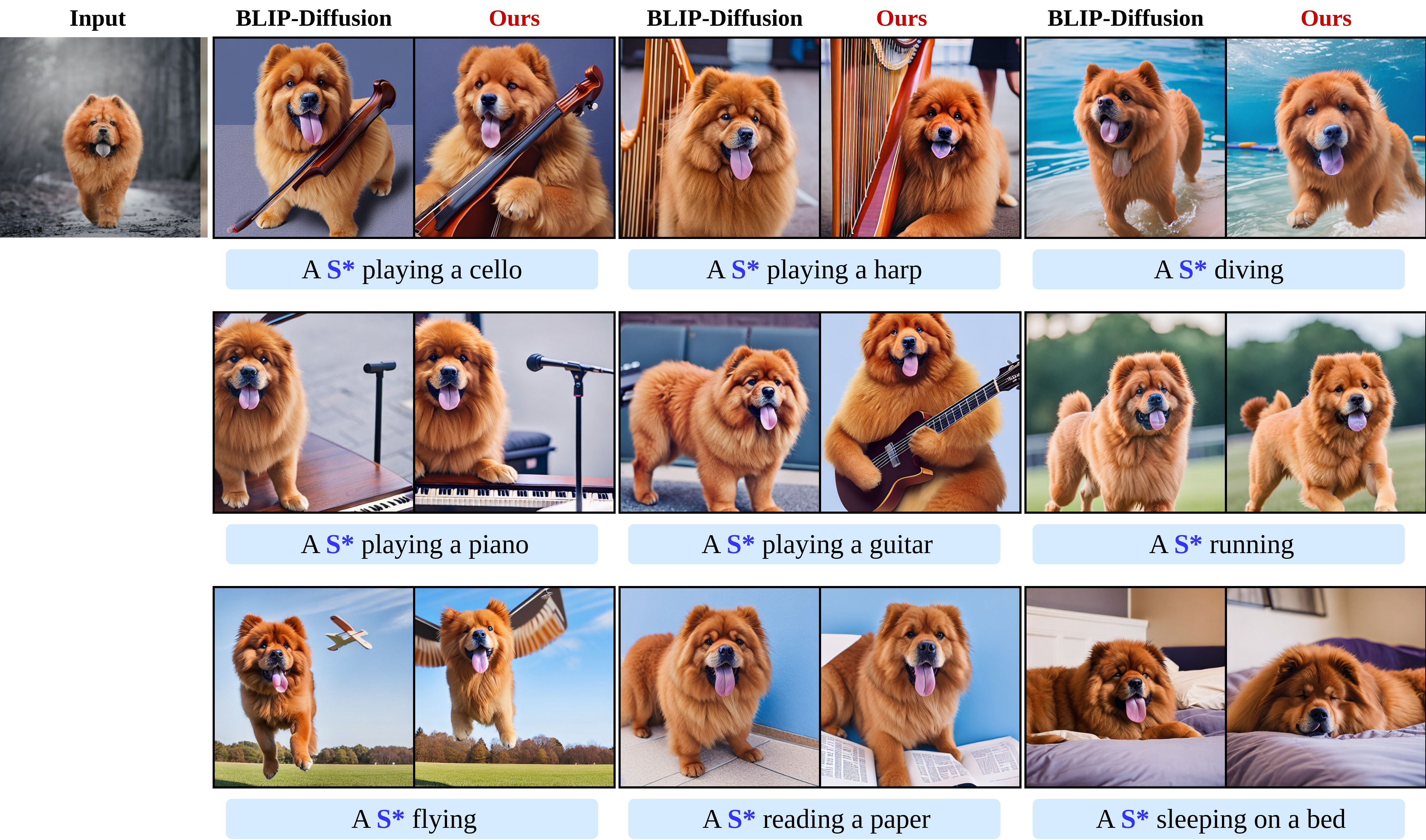

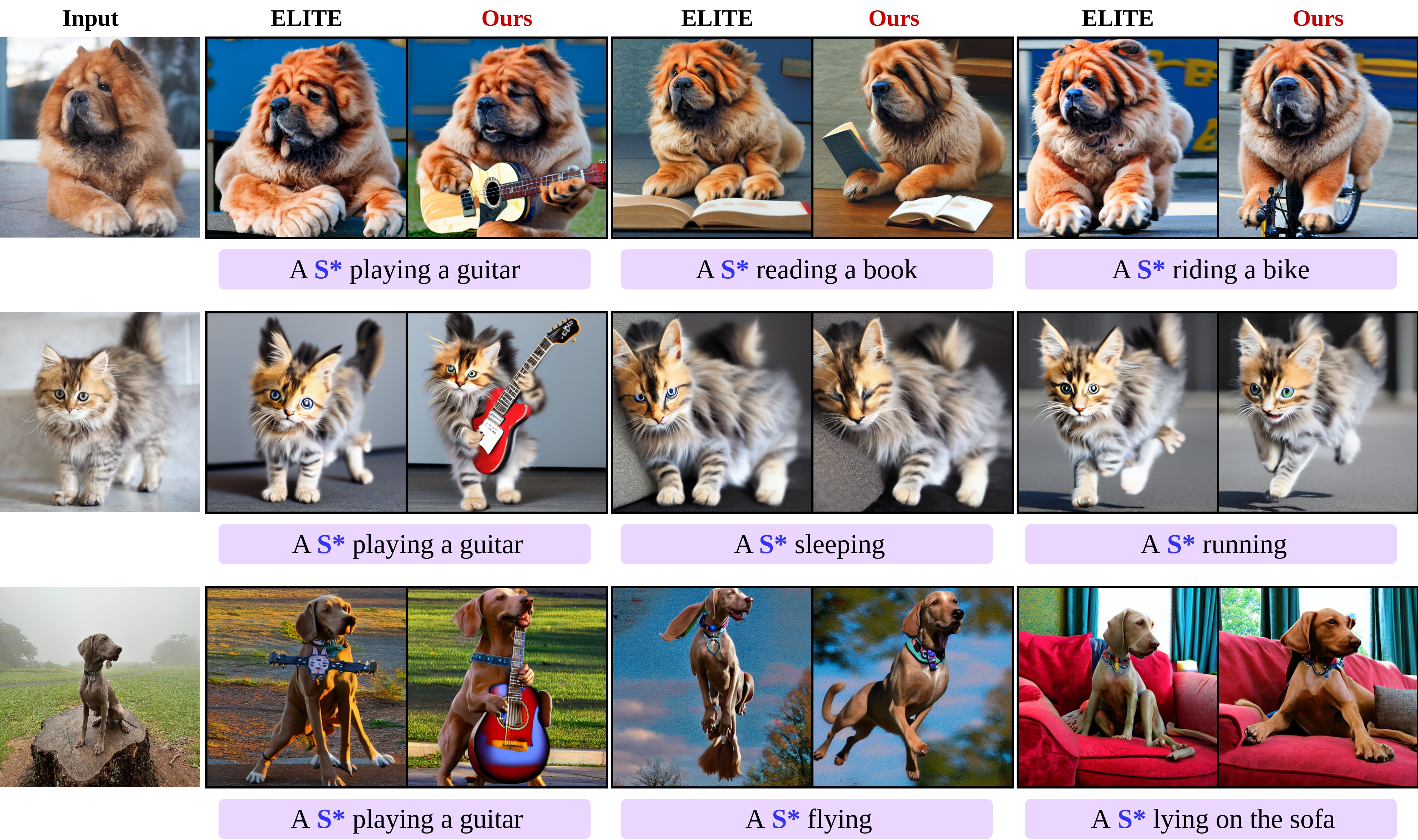

Amid a surge of text-to-image (T2I) models and customization methods that generate new images of a user-provided subject, recent work focuses on reducing the cost of lengthy per-subject optimization. These zero-shot customization methods encode an image of the specified subject into a visual embedding, which is then used alongside the textual embedding to guide diffusion. The visual embedding carries intrinsic information about the subject, while the textual embedding provides a new, transient context. However, the existing methods often

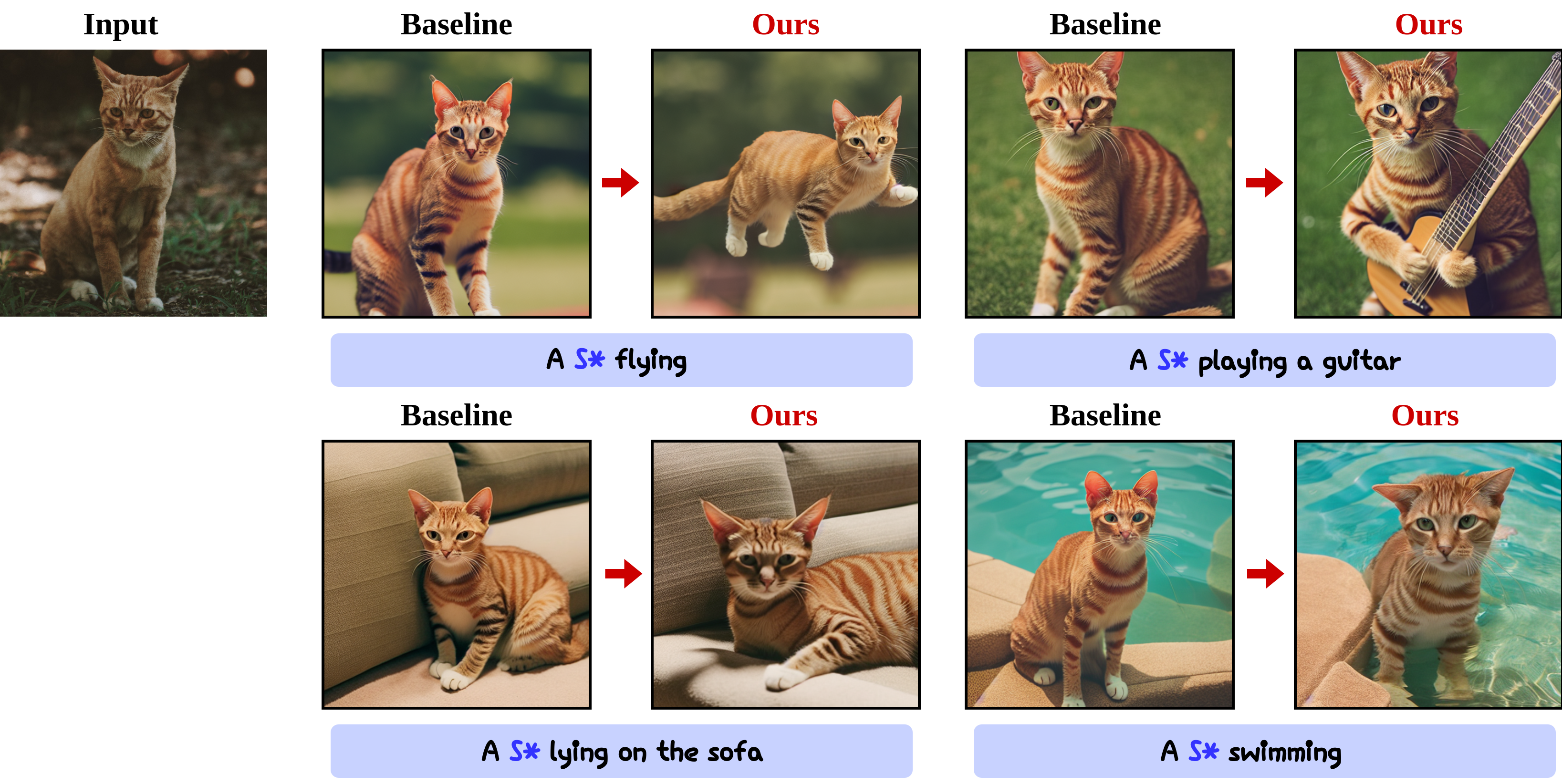

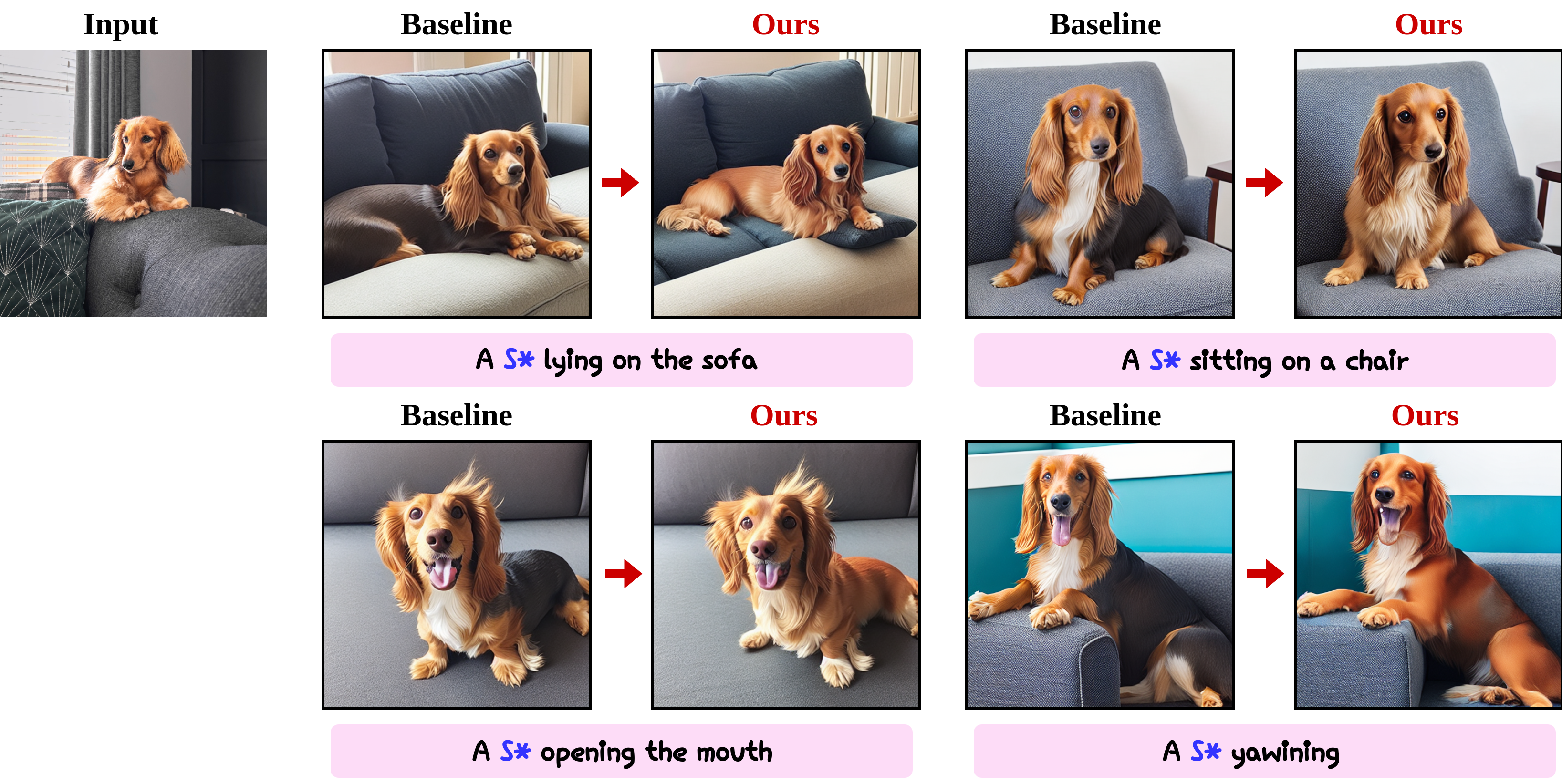

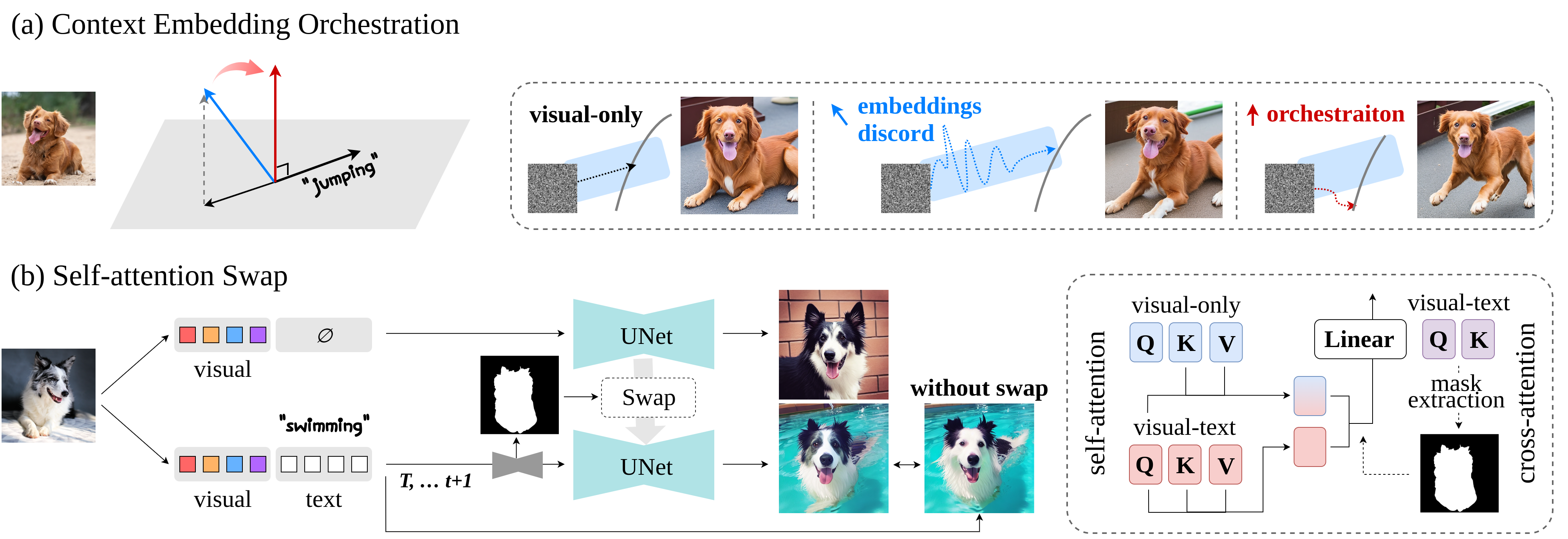

Orchestration decomposes the visual embedding into two components: one lies in the textual subspace and conflicts with the textual embedding, while the other is orthogonal to the textual subspace. By using only the latter, orthogonal visual embedding, we alleviate interference between the contextual embeddings.

Self-attention Swap runs an additional denoising process guided by the visual-only embedding, which avoids the collision between the visual and textual embeddings altogether. This lets us aggregate clean information about the subject.

Note: our method is generic and readily applies to any zero-shot customization method that uses visual and textual embeddings, since it requires no additional tuning.
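The decomposition performed by Orchestration can be illustrated with a standard orthogonal projection. The sketch below is a minimal illustration, not the authors' implementation: it assumes the textual subspace is the span of the textual token embeddings (the rows of a matrix `textual`), computes the in-subspace component by least squares, and keeps the residual as the orthogonal visual embedding. The function name `split_visual_embedding`, the array shapes, and the toy sizes are hypothetical.

```python
import numpy as np


def split_visual_embedding(visual: np.ndarray, textual: np.ndarray):
    """Split visual embedding(s) into a component inside the textual subspace
    and a component orthogonal to it.

    Assumption (illustrative only): the textual subspace is the span of the
    rows of `textual`.

    visual:  (m, d) visual tokens, or (d,) for a single token
    textual: (n, d) textual tokens
    Returns (parallel, orthogonal), each with the same shape as `visual`.
    """
    v = np.atleast_2d(visual).astype(float)   # (m, d)
    basis = textual.T.astype(float)           # (d, n); columns span the subspace
    # Least-squares coefficients so that basis @ coeffs best approximates each token.
    coeffs, *_ = np.linalg.lstsq(basis, v.T, rcond=None)  # (n, m)
    parallel = (basis @ coeffs).T             # projection onto span(textual)
    orthogonal = v - parallel                 # residual, perpendicular to that span
    if visual.ndim == 1:
        return parallel[0], orthogonal[0]
    return parallel, orthogonal


# Toy usage with random embeddings (sizes are arbitrary).
rng = np.random.default_rng(0)
textual = rng.standard_normal((4, 16))  # e.g. 4 text tokens of dimension 16
visual = rng.standard_normal((2, 16))   # e.g. 2 visual tokens
parallel, orthogonal = split_visual_embedding(visual, textual)
print(np.allclose(orthogonal @ textual.T, 0))      # True: no overlap with the textual subspace
print(np.allclose(parallel + orthogonal, visual))  # True: the split is exact
```

Using a least-squares solve instead of explicitly inverting `textual @ textual.T` keeps the projection well defined even when the textual tokens are linearly dependent.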

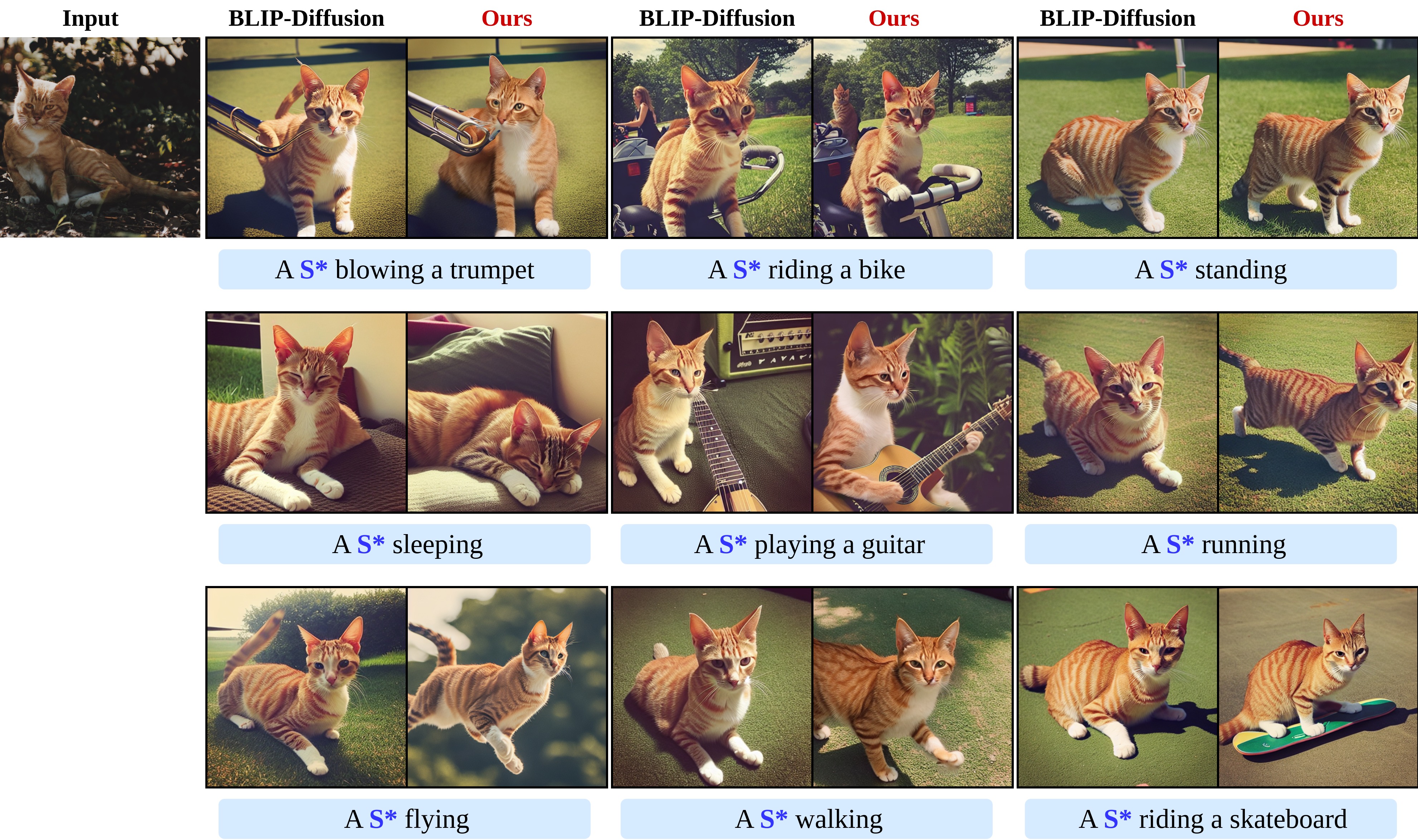

Our work builds on BLIP-Diffusion and ELITE; many thanks to their authors for these foundational contributions.

@misc{song2024harmonizing,
  title={Harmonizing Visual and Textual Embeddings for Zero-Shot Text-to-Image Customization},
  author={Yeji Song and Jimyeong Kim and Wonhark Park and Wonsik Shin and Wonjong Rhee and Nojun Kwak},
  year={2024},
  eprint={2403.14155},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}